Diffusion From Scratch

Deep dive into diffusion models by building one from the ground up.

Overview

It always struck me as fascinating how diffusion models, especially Denoising Diffusion Probabilistic Models (DDPMs), are capable of generating such high-quality images. The underlying principles of these models are quite complex, and I wanted to understand them better. To achieve this, I decided to build a diffusion model from scratch.

This project was primarily a learning exercise for me, so the repository is not fully polished or documented. It went through many experiments and iterations, and the code reflects that exploratory nature. You might find some parts less structured or even missing, as I created the repository mainly to share the core results of my learning journey.

Results

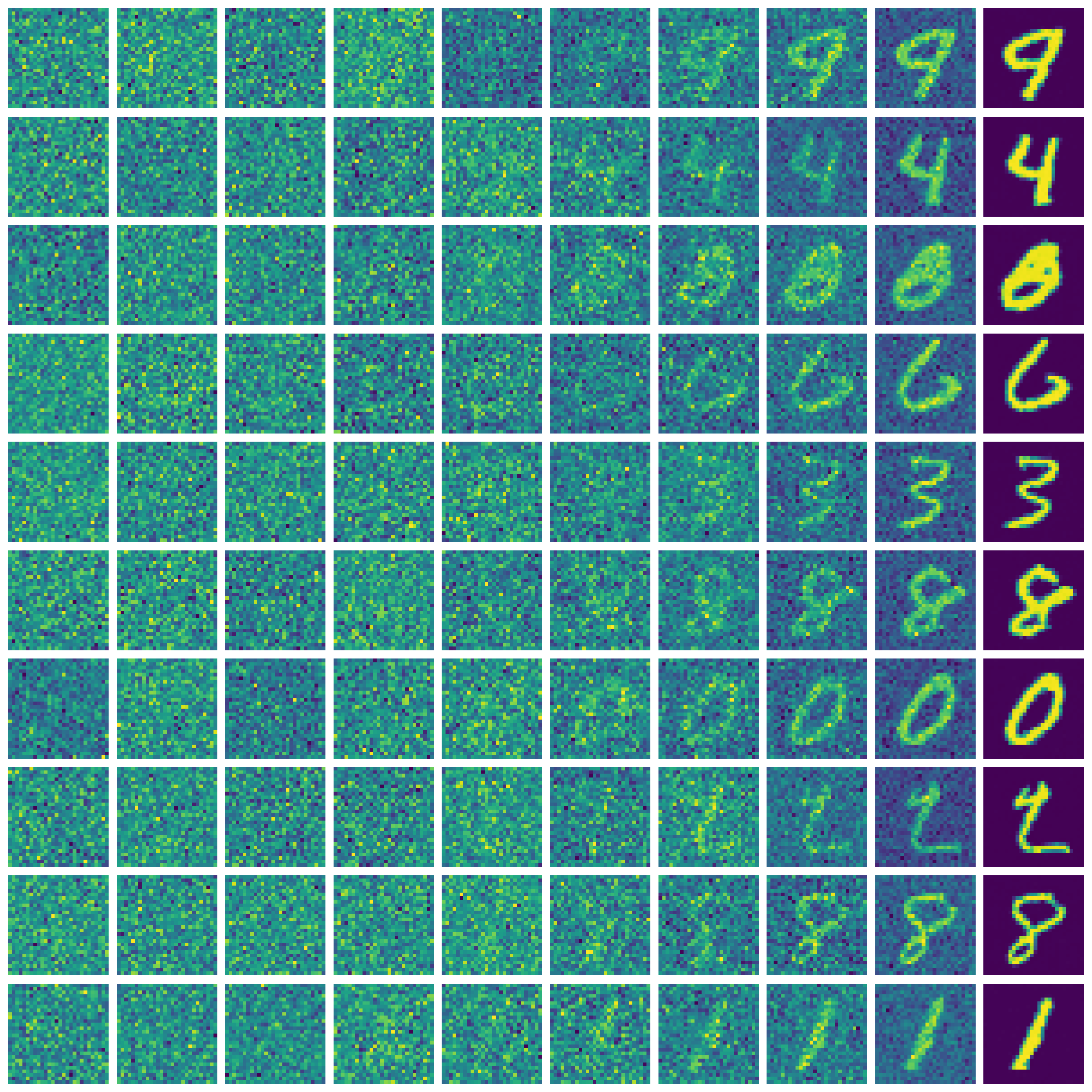

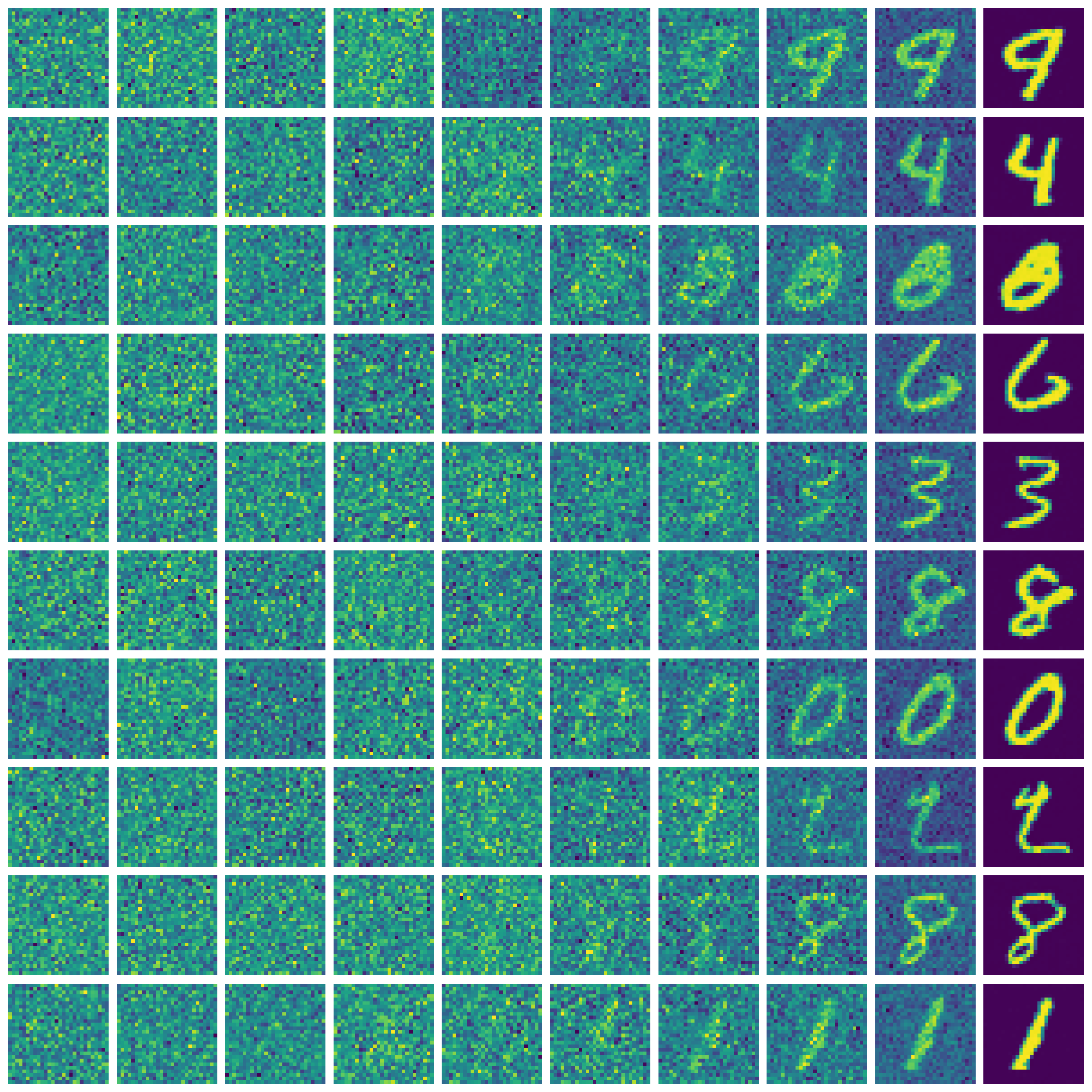

For a quick look at the results, you can check out the example outputs above. The model was first trained on the MNIST dataset, which is a collection of handwritten digits. While the dataset is relatively simple, it served as a good starting point. The model is not designed to take a text prompt and generate an image from it. I'll leave that for another time.

I later experimented with a different dataset, which I had seen used in this great resource: How Diffusion Models Work. It is a collection of sprites in the style of an old video game. The dataset is small, but the images are more complex than MNIST. Here is a preview of the dataset:

![]()

The dataset had one small issue: many of the images were repeated with very slight variations. This likely led the model to overfit on those specific images, which would explain why the results often collapsed to images very similar to those in the dataset. I believe that with a more diverse dataset, the model could produce much better results. Anyway, here are some results from the model trained on this dataset:

![]()
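
The near-duplicate issue described above can be checked for before training. The following is a minimal sketch, not something taken from this repository: the function names, the flat-list image representation, and the `threshold` value are all assumptions made for illustration. It keeps an image only if its mean squared difference to every previously kept image exceeds the threshold.

```python
def mean_squared_difference(a, b):
    """Average squared per-pixel difference between two equally sized images."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def drop_near_duplicates(images, threshold=1e-3):
    """Keep an image only if it differs enough from every image kept so far.

    `threshold` is a hypothetical value; it would need tuning per dataset.
    """
    kept = []
    for image in images:
        if all(mean_squared_difference(image, other) > threshold for other in kept):
            kept.append(image)
    return kept


# Tiny made-up "sprites", flattened to lists of pixel intensities in [0, 1].
sprites = [
    [0.0, 0.5, 1.0, 0.5],
    [0.0, 0.5, 1.0, 0.51],  # a slight variation of the first sprite
    [1.0, 0.0, 0.0, 1.0],
]
unique = drop_near_duplicates(sprites)
print(len(sprites), "->", len(unique))
```

The pairwise comparison is quadratic in the number of images, which is fine for a small dataset but would need a smarter approach (for example hashing downscaled images) on a large one.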

Project Details

The project started with me trying out a simple idea without understanding how DDPM actually works. The core principle I played around with was to add noise to an image and then train a neural network to remove that noise. As you might expect, the results were not very impressive, but not too terrible either. Still, I learned a lot from this initial experiment, and that gave me the motivation to dive deeper into the topic. I studied various resources, including research papers.

The final implementation is based on the principles outlined in the paper Denoising Diffusion Probabilistic Models. I also drew inspiration from various implementations available online.
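
To make the forward (noising) process from the paper concrete, here is a minimal sketch in plain Python. It uses the linear schedule from the DDPM paper (1000 steps, beta from 1e-4 to 0.02); whether this repository uses the same values is an assumption, and the function names are made up for illustration. The key property is that `x_t` can be sampled directly from `x_0` in one shot, without simulating every intermediate step.

```python
import math
import random


def linear_betas(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_t (range taken from the DDPM paper)."""
    step = (beta_end - beta_start) / max(num_steps - 1, 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) for all s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng=random):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [signal * x + sigma * e for x, e in zip(x0, noise)]
    return x_t, noise


betas = linear_betas()
alpha_bars = cumulative_alphas(betas)
x0 = [-1.0, -0.5, 0.0, 0.5, 1.0]  # a tiny made-up "image" scaled to [-1, 1]
x_t, noise = q_sample(x0, t=500, alpha_bars=alpha_bars, rng=random.Random(0))
print(x_t)
```

During training, the network receives `x_t` and `t` and is asked to predict `noise`, so each training example only needs a single call like the one above.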

Pitfalls

One of the main challenges I faced was understanding the mathematical foundations of diffusion models. The forward and reverse diffusion processes themselves were not hard to grasp. However, until I applied other key details (such as adding fresh noise at each step of the denoising process), the model was not able to generate images of sufficient quality.
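
The "additional noise during denoising" mentioned above corresponds to the sampling step in the DDPM paper: after computing the denoised mean, fresh Gaussian noise scaled by sigma_t is added at every step except the last. The sketch below is an illustration under stated assumptions, not this repository's code. It uses sigma_t^2 = beta_t (one of the two choices discussed in the paper), and `make_oracle` is a hypothetical stand-in for a trained network: it returns the exact noise for a "dataset" in which every pixel equals a single target value.

```python
import math
import random


def linear_betas(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced beta_t values (range taken from the DDPM paper)."""
    step = (beta_end - beta_start) / max(num_steps - 1, 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) for all s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def reverse_step(x_t, t, betas, alpha_bars, predict_noise, rng=random):
    """One sampling step x_t -> x_{t-1}; t is a 0-based index into the schedule."""
    beta_t = betas[t]
    eps = predict_noise(x_t, t)
    coef = beta_t / math.sqrt(1.0 - alpha_bars[t])
    mean = [(x - coef * e) / math.sqrt(1.0 - beta_t) for x, e in zip(x_t, eps)]
    if t == 0:
        return mean  # last step: return the mean without fresh noise
    sigma_t = math.sqrt(beta_t)  # assumption: sigma_t^2 = beta_t
    return [m + sigma_t * rng.gauss(0.0, 1.0) for m in mean]


def sample(num_pixels, betas, alpha_bars, predict_noise, rng=random):
    """Start from pure Gaussian noise and run all reverse steps."""
    x = [rng.gauss(0.0, 1.0) for _ in range(num_pixels)]
    for t in reversed(range(len(betas))):
        x = reverse_step(x, t, betas, alpha_bars, predict_noise, rng)
    return x


def make_oracle(target, alpha_bars):
    """Hypothetical perfect noise predictor for data where every pixel == target."""
    def predict_noise(x_t, t):
        signal = math.sqrt(alpha_bars[t])
        sigma = math.sqrt(1.0 - alpha_bars[t])
        return [(x - signal * target) / sigma for x in x_t]
    return predict_noise


betas = linear_betas()
alpha_bars = cumulative_alphas(betas)
generated = sample(4, betas, alpha_bars, make_oracle(0.25, alpha_bars), random.Random(0))
print(generated)
```

With the oracle in place, the sampler ends up at the target value, which makes this a handy sanity check for the sampling loop before plugging in a real network.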

Tuning the hyperparameters was another challenge. The choice of noise schedule, learning rate, and model architecture had a significant impact on the quality of the generated images, and I had to experiment with various configurations to find a setup that worked. The model often produced blurry, low-quality images. Sometimes it even converged to generating the same image repeatedly, indicating mode collapse. Those issues required me to revisit the training process and make adjustments.
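
One quick way to see why the noise schedule matters is to check how much of the original image survives at the final forward step. Sampling starts from pure noise, so the schedule should drive that fraction close to zero. The sketch below assumes a linear schedule with the beta range from the DDPM paper and a hypothetical helper name; it is a diagnostic for illustration, not the configuration used in this repository.

```python
import math


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced beta_t values (range taken from the DDPM paper)."""
    step = (beta_end - beta_start) / max(num_steps - 1, 1)
    return [beta_start + i * step for i in range(num_steps)]


def final_signal_fraction(betas):
    """sqrt(alpha_bar_T): the factor multiplying x_0 in the last noised sample."""
    log_alpha_bar = sum(math.log1p(-beta) for beta in betas)
    return math.exp(0.5 * log_alpha_bar)


for num_steps in (50, 200, 1000):
    fraction = final_signal_fraction(linear_betas(num_steps))
    print(f"T={num_steps:4d}  sqrt(alpha_bar_T)={fraction:.4f}")
```

With this beta range, 1000 steps leave less than 1% of the signal, while 50 steps leave more than half of it. In the second case the network never sees anything close to pure noise during training, so starting the sampler from pure noise is a mismatch.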

Another challenge was the computational resources required to train the model. Diffusion models are known to be computationally intensive, and training them from scratch requires significant time and resources. I had to optimize the training process and use smaller architectures and datasets to make it feasible. Even then, I still had to rent some cloud GPU time to train the model in a reasonable timeframe.